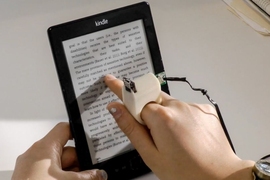

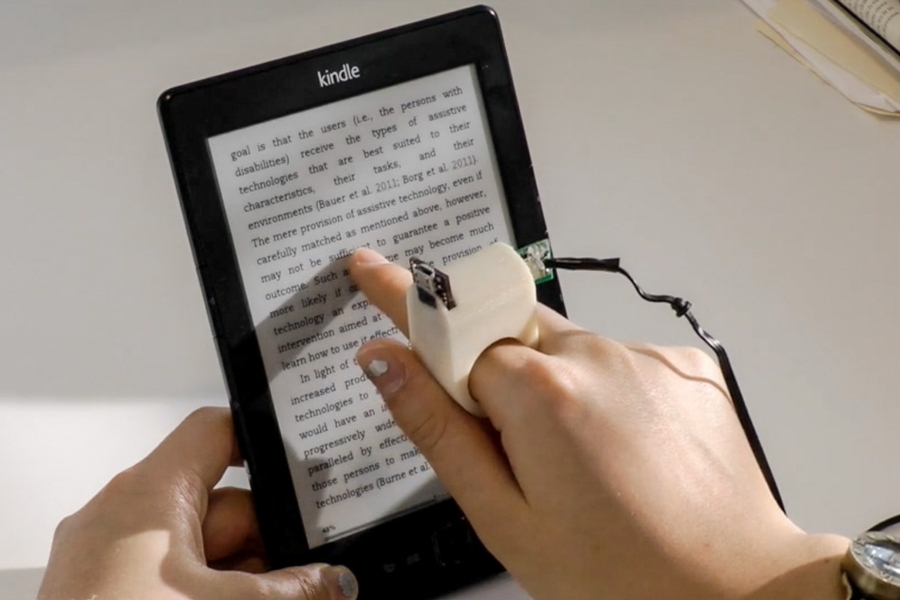

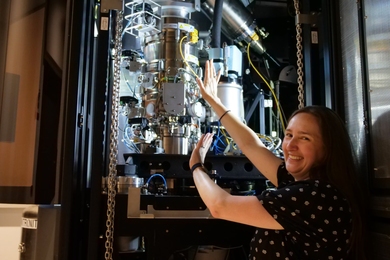

Researchers at the MIT Media Laboratory have built a prototype of a finger-mounted device with a built-in camera that converts written text into audio for visually impaired users. The device provides feedback — either tactile or audible — that guides the user’s finger along a line of text, and the system generates the corresponding audio in real time.

“You really need to have a tight coupling between what the person hears and where the fingertip is,” says Roy Shilkrot, an MIT graduate student in media arts and sciences and, together with Media Lab postdoc Jochen Huber, lead author on a new paper describing the device. “For visually impaired users, this is a translation. It’s something that translates whatever the finger is ‘seeing’ to audio. They really need a fast, real-time feedback to maintain this connection. If it’s broken, it breaks the illusion.”

Huber will present the paper at the Association for Computing Machinery’s Conference on Human Factors in Computing Systems (CHI) in April. Huber and Shilkrot’s co-authors are Pattie Maes, the Alexander W. Dreyfoos Professor in Media Arts and Sciences at MIT; Suranga Nanayakkara, an assistant professor of engineering product development at the Singapore University of Technology and Design, who was a postdoc and later a visiting professor in Maes’ lab; and Meng Ee Wong of Nanyang Technological University in Singapore.

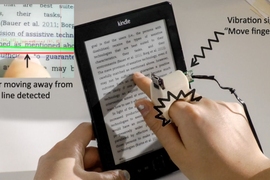

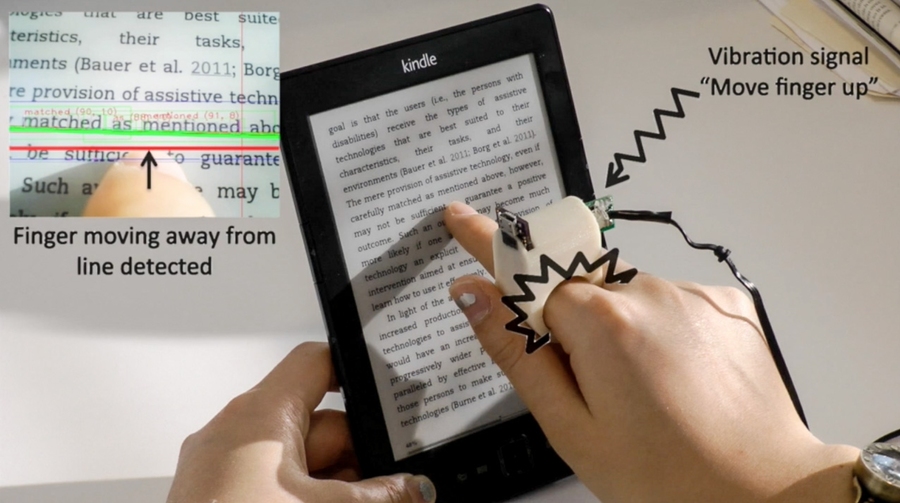

The paper also reports the results of a usability study with visually impaired volunteers, in which the researchers tested several variations of their device. One version included two haptic motors, one on top of the finger and the other beneath it; their vibrations indicated whether the subject should raise or lower the tracking finger.

Another version, without the motors, used audio feedback instead: a musical tone that grew louder if the user’s finger began to drift away from the line of text. The researchers also tested the motors and the musical tone together. The subjects, however, reached no consensus on which type of feedback was most useful, so in ongoing work the researchers are concentrating on audio feedback, which allows for a smaller, lighter sensor.
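The article does not say how drift is measured or how it maps to motor pulses or tone volume. As an illustration only, here is a minimal sketch of both feedback modes under a hypothetical convention: `offset_px` is the vertical distance between the fingertip’s reading point and the estimated text line, and the threshold and scaling parameters are invented for the example.

```python
from typing import Optional

# Hypothetical convention (not from the paper): offset_px is the vertical
# distance, in image pixels, between the fingertip's reading point and the
# estimated text line. Positive means the finger has drifted below the line,
# negative means it has drifted above it.


def haptic_cue(offset_px: float, tolerance_px: float = 5.0) -> Optional[str]:
    """Return the direction a two-motor version would signal, or None if on track."""
    if offset_px > tolerance_px:
        return "raise"  # finger is below the line
    if offset_px < -tolerance_px:
        return "lower"  # finger is above the line
    return None


def tone_volume(offset_px: float, tolerance_px: float = 5.0,
                max_offset_px: float = 40.0) -> float:
    """Map drift to a tone volume in [0, 1] that grows as the finger strays."""
    drift = abs(offset_px) - tolerance_px
    if drift <= 0:
        return 0.0  # silent while the finger stays within tolerance
    return min(drift / (max_offset_px - tolerance_px), 1.0)


for offset in (0.0, 12.0, -30.0, 60.0):
    print(offset, haptic_cue(offset), round(tone_volume(offset), 2))
```

The tone depends only on the size of the drift, matching the article’s description of a tone that simply gets louder. The motor cue also encodes direction, which is why the two-motor version needs one motor on each side of the finger.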

Bottom line

The key to the system’s real-time performance is an algorithm, developed by Shilkrot and his colleagues, for processing the camera’s video feed. Each time the user positions his or her finger at the start of a new line, the algorithm makes many guesses about where the baseline of the letters lies. Those guesses vary, because most lines of text include letters whose bottoms descend below the baseline, such as “g” and “p,” and because a skewed finger orientation can cause the system to confuse nearby lines. But most of the guesses tend to cluster together, and the algorithm selects the median value of the densest cluster.
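The article does not say how the guesses are generated or clustered. The following minimal sketch illustrates the “median of the densest cluster” step under stated assumptions: each guess is a single y-coordinate in pixels, clusters are formed by splitting the sorted guesses wherever neighbors are more than `max_gap` apart, and “densest” is approximated as “most members.” The function name and parameters are hypothetical.

```python
import statistics
from typing import List


def estimate_baseline(candidates: List[float], max_gap: float = 3.0) -> float:
    """Pick a baseline from noisy guesses (hypothetical 1-D gap clustering).

    Sorted guesses are split into clusters wherever consecutive values differ
    by more than max_gap; the largest cluster stands in for the densest one,
    and its median is returned.
    """
    if not candidates:
        raise ValueError("need at least one baseline guess")
    ys = sorted(candidates)
    clusters = [[ys[0]]]
    for y in ys[1:]:
        if y - clusters[-1][-1] <= max_gap:
            clusters[-1].append(y)  # close to the previous guess: same cluster
        else:
            clusters.append([y])    # large jump: start a new cluster
    densest = max(clusters, key=len)
    return statistics.median(densest)


# Guesses near y=100 agree; 112-113 might come from descenders or a
# neighboring line, and 140 is an outlier.
guesses = [100.0, 101.0, 99.0, 100.5, 112.0, 113.0, 140.0]
print(estimate_baseline(guesses))
```

Taking the median rather than the mean keeps a few stray guesses at the cluster’s edge from pulling the estimate off the true baseline.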

That value, in turn, constrains the guesses the system makes in each new video frame as the user’s finger moves to the right, which reduces the algorithm’s computational burden.
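To show how a prior estimate can cut per-frame work, here is a minimal sketch, assuming (hypothetically) that each later frame is searched only within a horizontal band around the previous baseline and that only guesses inside that band are kept. The article does not describe the actual constraint; `search_band`, `track_baseline`, and `window_px` are illustrative names.

```python
import statistics
from typing import Iterable, Tuple


def search_band(prev_baseline: float, window_px: float,
                frame_height: int) -> Tuple[int, int]:
    """Half-open row range [top, bottom) to scan for the baseline in the next frame."""
    top = max(0, int(prev_baseline - window_px))
    bottom = min(frame_height, int(prev_baseline + window_px) + 1)
    return top, bottom


def track_baseline(prev_baseline: float, candidates: Iterable[float],
                   window_px: float = 6.0) -> float:
    """Update the baseline using only guesses near the previous estimate."""
    nearby = [y for y in candidates if abs(y - prev_baseline) <= window_px]
    if not nearby:
        return prev_baseline  # nothing plausible this frame: keep the old estimate
    return statistics.median(nearby)


# Scan only rows 94-106 of a 480-row frame instead of the whole image.
print(search_band(100.25, 6.0, 480))
print(track_baseline(100.25, [101.0, 99.5, 130.0, 70.0]))
```

The savings come from `search_band`. Examining about a dozen rows instead of the full frame height is what lowers the computational burden. `track_baseline` then discards guesses that jump to a neighboring line.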

Given its estimate of the text’s baseline, the algorithm also tracks each individual word as it slides past the camera. When it recognizes that a word is near the center of the camera’s field of view, where distortion is lowest, it crops just that word out of the image. The baseline estimate also lets the algorithm realign the word, compensating for distortion caused by unusual camera angles, before passing it to open-source software that recognizes the characters and converts the recognized words into synthesized speech.
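The article does not name the word tracker or the open-source recognition software, so the sketch below covers only the geometry. It chooses the word closest to the frame’s center and computes the rotation that levels a tilted baseline. `WordBox`, `pick_centered_word`, `level_baseline`, and the tolerance are hypothetical. Warping the actual pixels and running character recognition would be handled by an image library and the OCR software, which are not shown.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


@dataclass
class WordBox:
    """Hypothetical axis-aligned bounding box of a tracked word, in pixels."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def center_x(self) -> float:
        return (self.x_min + self.x_max) / 2


def pick_centered_word(words: Sequence[WordBox], frame_width: float,
                       tolerance_frac: float = 0.1) -> Optional[WordBox]:
    """Return the word nearest the frame's center, if it is close enough to crop."""
    mid = frame_width / 2
    best = min(words, key=lambda w: abs(w.center_x - mid), default=None)
    if best is None or abs(best.center_x - mid) > tolerance_frac * frame_width:
        return None  # wait until a word slides closer to the center
    return best


def rotate_point(pt: Point, center: Point, angle: float) -> Point:
    """Rotate pt about center by angle (radians)."""
    dx, dy = pt[0] - center[0], pt[1] - center[1]
    c, s = math.cos(angle), math.sin(angle)
    return (center[0] + c * dx - s * dy, center[1] + s * dx + c * dy)


def level_baseline(p0: Point, p1: Point) -> Tuple[Point, Point]:
    """Rotate the baseline's endpoints about their midpoint so it becomes horizontal."""
    angle = math.atan2(p1[1] - p0[1], p1[0] - p0[0])  # current tilt of the baseline
    center = ((p0[0] + p1[0]) / 2, (p0[1] + p1[1]) / 2)
    return rotate_point(p0, center, -angle), rotate_point(p1, center, -angle)


words = [WordBox(0, 0, 100, 20), WordBox(290, 0, 360, 20)]
print(pick_centered_word(words, frame_width=640))
print(level_baseline((0.0, 0.0), (10.0, 10.0)))
```

Applying the same `-angle` rotation to every pixel of the cropped word would deskew it before recognition.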

In the work reported in the new paper, the algorithms were executed on a laptop connected to the finger-mounted devices. But in ongoing work, Marcel Polanco, a master’s student in computer science and engineering, and Michael Chang, an undergraduate computer science major participating in the project through MIT’s Undergraduate Research Opportunities Program, are developing a version of the software that runs on an Android phone, to make the system more portable.

The researchers have also discovered that their device may have broader applications than they’d initially realized. “Since we started working on that, it really became obvious to us that anyone who needs help with reading can benefit from this,” Shilkrot says. “We got many emails and requests from organizations, but also just parents of children with dyslexia, for instance.”

“It’s a good idea to use the finger in place of eye motion, because fingers are, like the eye, capable of quickly moving with intention in x and y and can scan things quickly,” says George Stetten, a physician and engineer with joint appointments at Carnegie Mellon’s Robotics Institute and the University of Pittsburgh’s Bioengineering Department, who is developing a finger-mounted device that gives visually impaired users information about distant objects. “I am very impressed with what they do.”