MIT.nano has announced its next round of seed grants to support hardware and software research related to sensors, 3D/4D interaction and analysis, augmented and virtual reality (AR/VR), and gaming. The grants are awarded through the MIT.nano Immersion Lab Gaming Program, a four-year collaboration between MIT.nano and NCSOFT, a digital entertainment company and founding member of the MIT.nano Consortium.

“We are pleased to be able to continue supporting research at the crossroads of the physical and the digital thanks to this collaboration with NCSOFT,” says MIT.nano Associate Director Brian W. Anthony, who is also principal research scientist in mechanical engineering and the Institute for Medical Engineering and Science. “These projects are just a few examples of the ways MIT researchers are exploring how new technologies could change how humans interact with the world and with each other.”

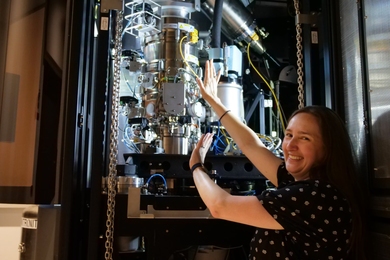

The MIT.nano Immersion Lab is a two-story immersive space dedicated to visualizing, understanding, and interacting with large data and synthetic environments. Outfitted with equipment and software tools for motion capture, photogrammetry, and 4D experiences, and supported by expert technical staff, this open-access facility is available for use by any MIT student, faculty, or researcher, as well as external users.

This year, three projects have been selected to receive seed grants:

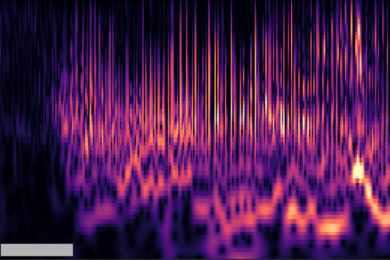

Ian Condry: Innovations in spatial audio and immersive sound

Professor of Japanese Culture and Media Studies Ian Condry is exploring spatial sound research and technology for video gaming. Specifically, Condry and co-investigator Philip Tan, research scientist and creative director at the MIT Game Lab, hope to develop software to add “the roar of the crowd” to online gameplay and e-sports so that players and spectators can hear and participate in the sound.

Condry and Tan will use the MIT Spatial Sound Lab’s object-based mixing technology, paired with the tracking and playback capabilities of the Immersion Lab, to gather data and compare various approaches to immersive audio. The two envision the project potentially leading to fully immersive “in real life” gaming experiences with 360-degree video, or to blended gaming in which online and in-person players can be present at the same event and interact with the performers.

Robert Hupp: Immersive athlete-training technology and data-driven coaching support in fencing

Seeking to improve athlete training, practice, and the coaching experience to maximize learning while minimizing injury risk, MIT Assistant Coach Robert Hupp aims to advance fencing pedagogy through extended reality (XR) technology and biomechanical data.

Hupp, who has been working with MIT.nano Immersion Lab staff, says the preliminary data suggest that technology-assisted self-paced practice can make a fencer’s movements more compact, and that practicing in an immersive environment can improve responsive techniques. He spoke about data-driven coaching support and athlete training at an MIT.nano IMMERSED seminar in September 2021.

With this seed grant, Hupp plans to develop an immersive training system for self-paced athlete learning, create a biofeedback system to support coaches, conduct scientific studies to track an athlete’s progress, and advance current understanding of opponent interaction. He envisions the work having an impact in athletics, biomechanics, and physical therapy, and he expects that XR-based training could expand to other sports.

Jeehwan Kim: Next-generation human/computer interface for advanced AR/VR gaming

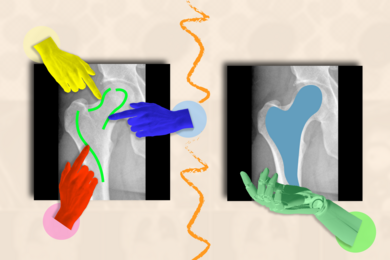

The most widely used user interaction methods for AR/VR gaming are gaze and motion tracking. However, according to associate professor of mechanical engineering Jeehwan Kim, current state-of-the-art devices fail to provide truly immersive AR/VR experiences due to their limitations in size, power consumption, perceptibility, and reliability.

Kim, who is also an associate professor in materials science and engineering, proposes a microLED/pupillary dilation (PD)-based gaze tracker and an electronic skin (e-skin)-based, controller-free motion tracker for a next-generation AR/VR human-computer interface. Kim’s gaze tracker is more compact and consumes less energy than conventional trackers. It can be integrated into see-through displays and could be used to develop compact AR glasses. The e-skin motion tracker can imperceptibly adhere to human skin and accurately detect human motions, which Kim says will facilitate more natural human interaction with AR/VR.

This is the third year of seed grant awards from the MIT.nano Immersion Lab Gaming Program. In the program’s first two calls for proposals, in 2019 and 2020, 12 projects from five departments were awarded $1.5 million in combined research funding. The collaborative proposal selection process by MIT.nano and NCSOFT ensures that the awarded projects are developing industrially impactful advancements, and that MIT researchers are exposed to technical partners at NCSOFT for the duration of the seed grants.